My venetian blinds, in black and white

I recently watched Josiah Parry’s wonderful video, “Making R 300x times faster!" It’s a great demonstration of how to rewrite code to be faster, and it’s worth your time. He rewrites some R code to be faster, then improves the speed again by writing some Rust code, which is called from R. He gets a 300 times speedup, which is really awesome.

Then someone writes in with an example of some code that is even faster than that, just using R code. It ends up being about 6 times faster than his Rust code. So a (300x6) 2000 times speed up. The main thing that helped with that was ensuring to vectorise your R code. Essentially, not working on the rows, but instead working on the columns.

Throughout the video Josiah makes good use of the bench R package to evaluate how much faster your code is. This idea is called “microbenchmarking”, and it involves running your code many times to evaluate how much faster it is than some other option. The reason you want to run your code many times is there is often variation around the runtimes in your code, so you don’t just want to base your improvements around a single measurement. It’s a general standard approach to attempt tp truly compare your approach to another.

All this being said, you should be wary of trying to make your code fast first without good reason. You want to make sure your code does the right thing first. Don’t just start trying to write performant code. Or as Donald Knuth says:

“The real problem is that programmers have spent far too much time worrying about efficiency in the wrong places and at the wrong times; premature optimization is the root of all evil (or at least most of it) in programming.”.

If you want to learn more about how to speed up your code, I think it’s worthwhile reading up on the measuring performance chapter in Advanced R.

An example microbenchmark

Let’s take an example from the naniar package. I’ll give more detail of this story of this optimisation at the end of this section. For the moment, let’s say we want to get the number of missing values in a row of a data frame. We can do something like this:

library(dplyr)

#>

#> Attaching package: 'dplyr'

#> The following objects are masked from 'package:stats':

#>

#> filter, lag

#> The following objects are masked from 'package:base':

#>

#> intersect, setdiff, setequal, union

my_n_miss <- function(data){

data |>

rowwise() |>

mutate(

n_miss = sum(is.na(c_across(everything())))

) |>

ungroup()

}

my_n_miss(airquality)

#> # A tibble: 153 × 7

#> Ozone Solar.R Wind Temp Month Day n_miss

#> <int> <int> <dbl> <int> <int> <int> <int>

#> 1 41 190 7.4 67 5 1 0

#> 2 36 118 8 72 5 2 0

#> 3 12 149 12.6 74 5 3 0

#> 4 18 313 11.5 62 5 4 0

#> 5 NA NA 14.3 56 5 5 2

#> 6 28 NA 14.9 66 5 6 1

#> 7 23 299 8.6 65 5 7 0

#> 8 19 99 13.8 59 5 8 0

#> 9 8 19 20.1 61 5 9 0

#> 10 NA 194 8.6 69 5 10 1

#> # ℹ 143 more rows

But we can speed this up using rowSums() instead:

new_n_miss <- function(data){

n_misses <- rowSums(is.na(data))

data |>

mutate(

n_miss = n_misses

) |>

as_tibble()

}

new_n_miss(airquality)

#> # A tibble: 153 × 7

#> Ozone Solar.R Wind Temp Month Day n_miss

#> <int> <int> <dbl> <int> <int> <int> <dbl>

#> 1 41 190 7.4 67 5 1 0

#> 2 36 118 8 72 5 2 0

#> 3 12 149 12.6 74 5 3 0

#> 4 18 313 11.5 62 5 4 0

#> 5 NA NA 14.3 56 5 5 2

#> 6 28 NA 14.9 66 5 6 1

#> 7 23 299 8.6 65 5 7 0

#> 8 19 99 13.8 59 5 8 0

#> 9 8 19 20.1 61 5 9 0

#> 10 NA 194 8.6 69 5 10 1

#> # ℹ 143 more rows

my_n_miss(airquality)

#> # A tibble: 153 × 7

#> Ozone Solar.R Wind Temp Month Day n_miss

#> <int> <int> <dbl> <int> <int> <int> <int>

#> 1 41 190 7.4 67 5 1 0

#> 2 36 118 8 72 5 2 0

#> 3 12 149 12.6 74 5 3 0

#> 4 18 313 11.5 62 5 4 0

#> 5 NA NA 14.3 56 5 5 2

#> 6 28 NA 14.9 66 5 6 1

#> 7 23 299 8.6 65 5 7 0

#> 8 19 99 13.8 59 5 8 0

#> 9 8 19 20.1 61 5 9 0

#> 10 NA 194 8.6 69 5 10 1

#> # ℹ 143 more rows

We can measure the speed using bench::mark():

library(bench)

bm <- mark(

old = my_n_miss(airquality),

new = new_n_miss(airquality)

)

bm

#> # A tibble: 2 × 6

#> expression min median `itr/sec` mem_alloc `gc/sec`

#> <bch:expr> <bch:tm> <bch:tm> <dbl> <bch:byt> <dbl>

#> 1 old 23.9ms 23.9ms 41.8 355.5KB 334.

#> 2 new 347.5µs 384.5µs 2385. 81.8KB 34.8

This runs the code at least twice, and prints out the amount of time it takes to run the code provided on the right hand side of “old” and “new”. But you can name them whatever you want.

Now, it can be kind of hard to see just how much faster this is, if you just look at comparing the times, as the times are given here in…well, actually I’m not sure why our friend from the greek alphabet mu, µ, from the greek alphabet is here, actually? If, like me, you needed to double check the standard measures of order of magnitude wiki page, you might not know that “ms” means milli - or one thousandth, and µ means “micro”, or one millionth. The point is that the new one is many times faster than the old one.

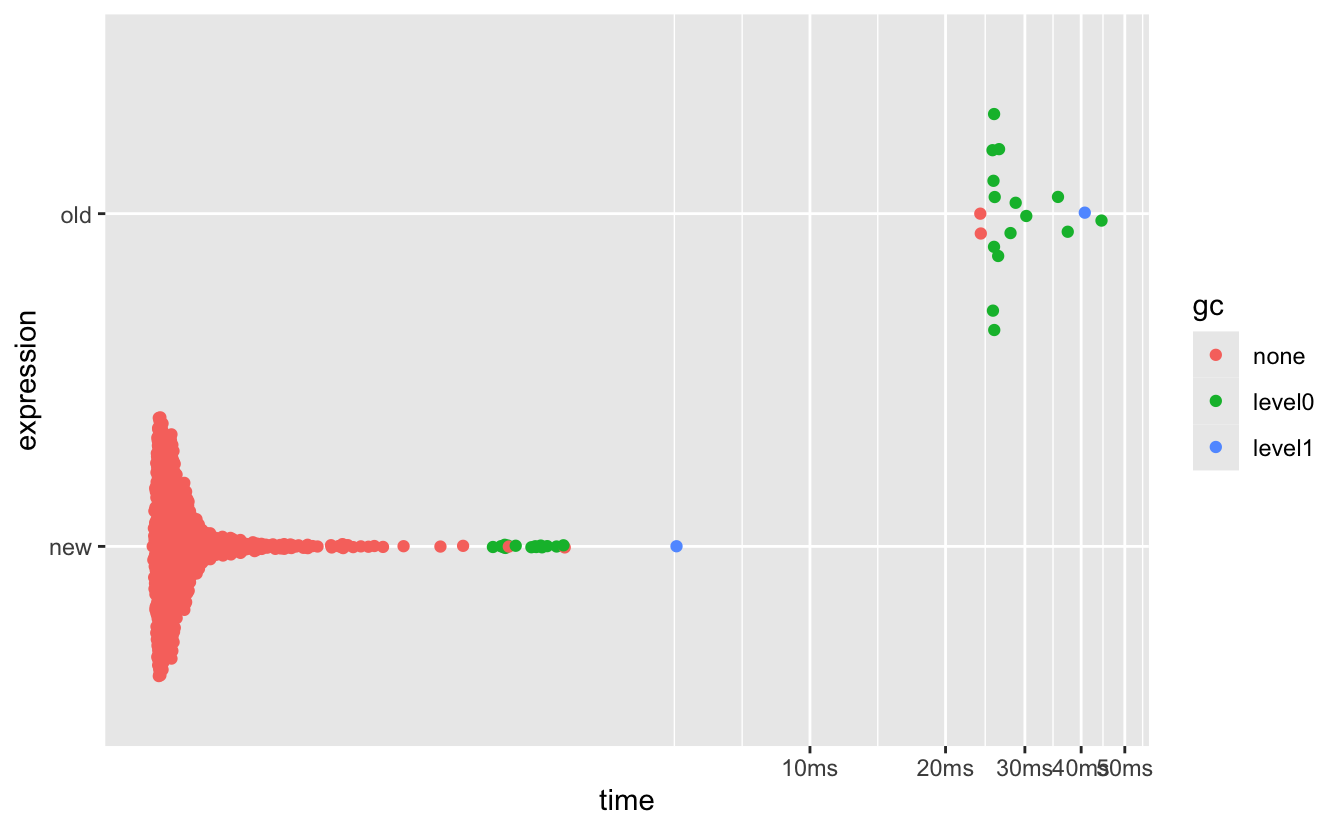

We can do a plot to help see this:

plot(bm)

#> Loading required namespace: tidyr

So we can see that the new one really is a lot faster.

But if I just want to be able to say something like:

It is XX times faster

then we can use the (somewhat unknown?) relative = TRUE option of bench’s S3 method for summary method:

summary(bm, relative = TRUE)

#> # A tibble: 2 × 6

#> expression min median `itr/sec` mem_alloc `gc/sec`

#> <bch:expr> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 old 68.7 62.2 1 4.34 9.62

#> 2 new 1 1 57.0 1 1

And this is great, from this we can see it is about 60 times faster. And that the old approach uses 15 times more memory.

The story behind this speedup in naniar.

Now, I didn’t just come up with a speedup for missing values on the fly. The story here is that there was going to be some (very generous) improvements to the naniar package from Romain François in the form of C++ code. However, Jim Hester suggested some changes, I think twitter (which I can’t find anymore), and he then kindly submitted a pull request showing that rowSums in R ends up being plenty fast.

This is a similar story to Josiah’s, where he used Rust code to get it faster, but then there was a faster way just staying within R.

Sometimes, you don’t need extra C or Fortran or Rust. R is enough!

And if you want to be able to compare the speeds of things, don’t forget the relative = TRUE argument in summary when using bench::mark.

Other packages for microbenchmarking

bench isn’t the only way to measure things! Other ones I’ve enjoyed using in the past are microbenchmark and tictoc. I’ve particularly enjoyed tictoc because you get to do this:

library(tictoc)

tic()

new_n_miss(airquality)

#> # A tibble: 153 × 7

#> Ozone Solar.R Wind Temp Month Day n_miss

#> <int> <int> <dbl> <int> <int> <int> <dbl>

#> 1 41 190 7.4 67 5 1 0

#> 2 36 118 8 72 5 2 0

#> 3 12 149 12.6 74 5 3 0

#> 4 18 313 11.5 62 5 4 0

#> 5 NA NA 14.3 56 5 5 2

#> 6 28 NA 14.9 66 5 6 1

#> 7 23 299 8.6 65 5 7 0

#> 8 19 99 13.8 59 5 8 0

#> 9 8 19 20.1 61 5 9 0

#> 10 NA 194 8.6 69 5 10 1

#> # ℹ 143 more rows

toc()

#> 0.01 sec elapsed

Which feels a bit nicer than using system.time():

system.time({

new_n_miss(airquality)

})

#> user system elapsed

#> 0.001 0.000 0.002

Also, notice that those two times are different? This is why we use benchmarking, to run those checks many times!

End

And that’s it, that’s the blog post. The relative = TRUE option in mark is super neat, and I don’t think many people know about it. Thanks again to Jim Hester for originally creating the bench package.